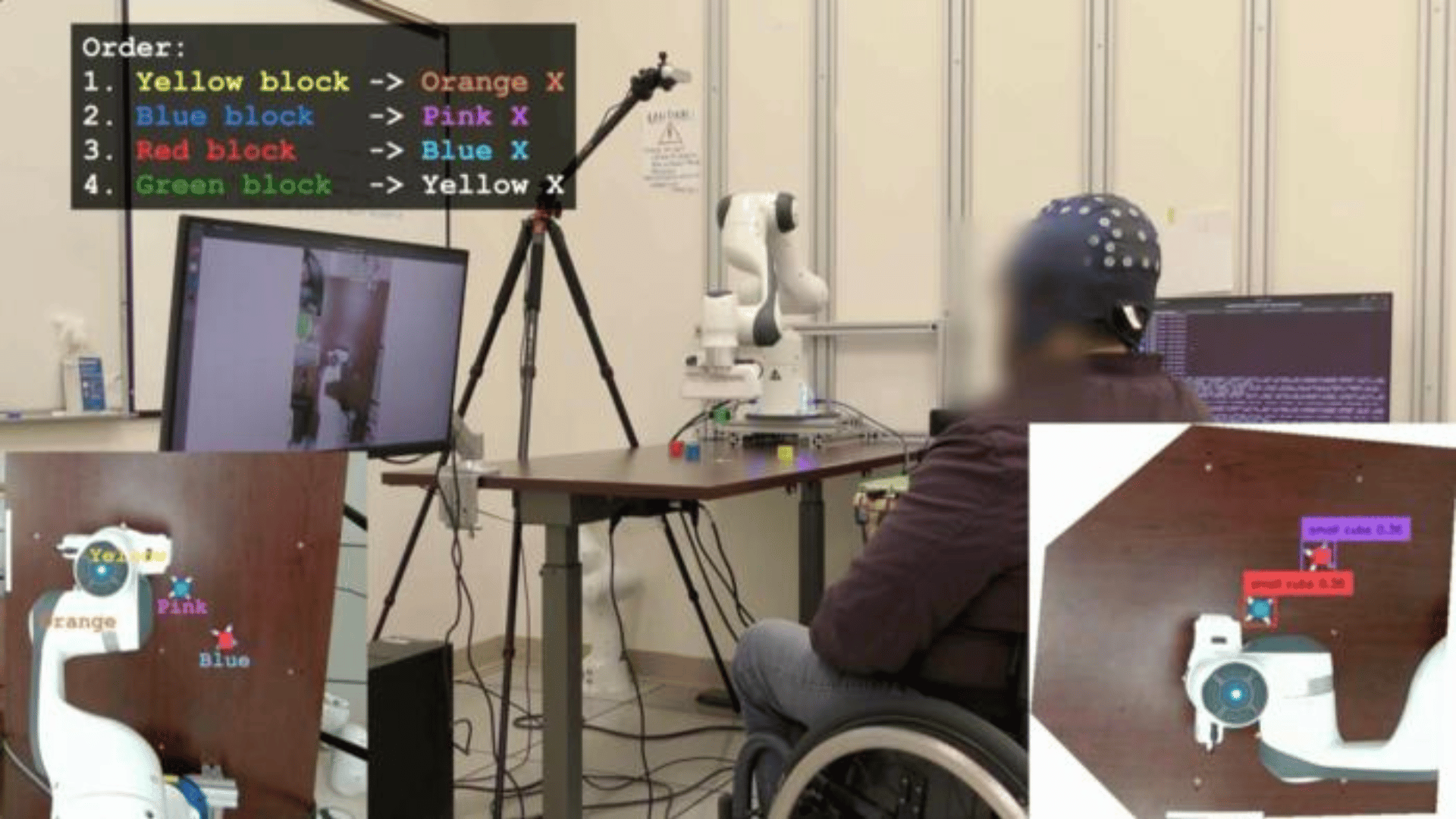

UCLA engineers are creating a world where someone with paralysis could wear a cap that allows them to control a robotic arm using only their thoughts. The engineering team developed a new brain-computer interface (BCI) that uses AI to help people complete tasks quickly and accurately.

According to the engineers, the new brain-computer interface could lead to a range of technologies that help people with limited physical capabilities, such as those caused by paralysis or neurological disorders, move objects easily and precisely.

Researchers detailed their findings in the journal Nature Machine Intelligence.

According to the team, the technology is noninvasive, unlike some existing BCI systems that require brain surgery. UCLA’s system uses a standard electroencephalogram (EEG) cap to read the brain’s electrical signals. Those signals are then translated into commands for a robotic arm or a computer cursor.

A New Way to Connect With Brain Chip Technology

Engineers say the system is special because of its AI co-pilot, which works in tandem with the user. The AI infers the user’s intent and helps guide the cursor or arm, making the process faster and more accurate.

The study’s leader, Jonathan Kao, and his team call the collaboration between humans and AI a game-changer. “By using artificial intelligence to complement brain-computer interface systems, we’re aiming for much less risky and invasive avenues,” said Kao, an associate professor of electrical and computer engineering at the UCLA Samueli School of Engineering. “Ultimately, we want to develop AI-BCI systems that offer shared autonomy, allowing people with movement disorders, such as paralysis or ALS, to regain some independence for everyday tasks.”

Researchers ran a key test with four participants, including one who is paralyzed from the waist down. The research team says the results were promising. According to the team, all participants completed the tasks, which included guiding a cursor to targets on a screen and moving blocks with a robotic arm. They also say participants finished significantly faster with the AI’s help.

The paralyzed participant could not complete the robotic arm task without assistance but finished it in about six and a half minutes with the AI’s help.

The AI uses computer vision to infer the user’s intent rather than strictly relying on eye movements.

“Next steps for AI-BCI systems could include the development of more advanced co-pilots that move robotic arms with more speed and precision, and offer a deft touch that adapts to the object the user wants to grasp,” said co-lead author Johannes Lee, a doctoral candidate in computer engineering.

“And adding in larger-scale training data could also help the AI collaborate on more complex tasks, as well as improve EEG decoding itself,” Lee concluded.