Robotics often draws inspiration from the natural world, but using robots to learn about animals is far less common. Scientists from EPFL and Duke University have developed robotic zebrafish that mimic the reactions of real fish to visual cues and water flow.

Robotics Inspires Animal Research

The study explores how brain circuits, body mechanics, and the environment collectively control fish behavior. Whereas neuroscience often studies the brain in isolated lab settings, this approach accounts for the specific environments in which animals’ brains evolved.

“We can’t fully understand how environmental factors shape brain function without considering the body in which that brain evolved,” the researchers noted.

According to Interesting Engineering, EPFL’s BioRobotics Lab specializes in developing bioinspired robots. This study examines how an animal's physical form shapes its behavior and perception.

“Our simulated larval zebrafish provided virtual embodiment, which allowed us to observe its reaction to simulated fluid dynamics and visual scenes. Then, we used a physical robot to observe these interactions in the real world. These connections to the environment can’t be studied with an isolated brain in a lab,” explains BioRobotics Lab head Auke Ijspeert.

For this study, neurobiologist Eva Naumann and her team at Duke University provided a realistic neural network model inspired by live imaging of zebrafish brains. They also monitored how the fish behaved when exposed to shifting visual patterns meant to simulate moving water.

These data were used to build a detailed simulation of the zebrafish’s visual processing, swimming reflexes, and spinal connections. The simulation could reproduce the fish’s optomotor response, the reflexive swimming that helps real fish hold their position against a current.

Notably, the study revealed that the majority of neural signals responsible for the fish’s movement come from a small portion of the retina.

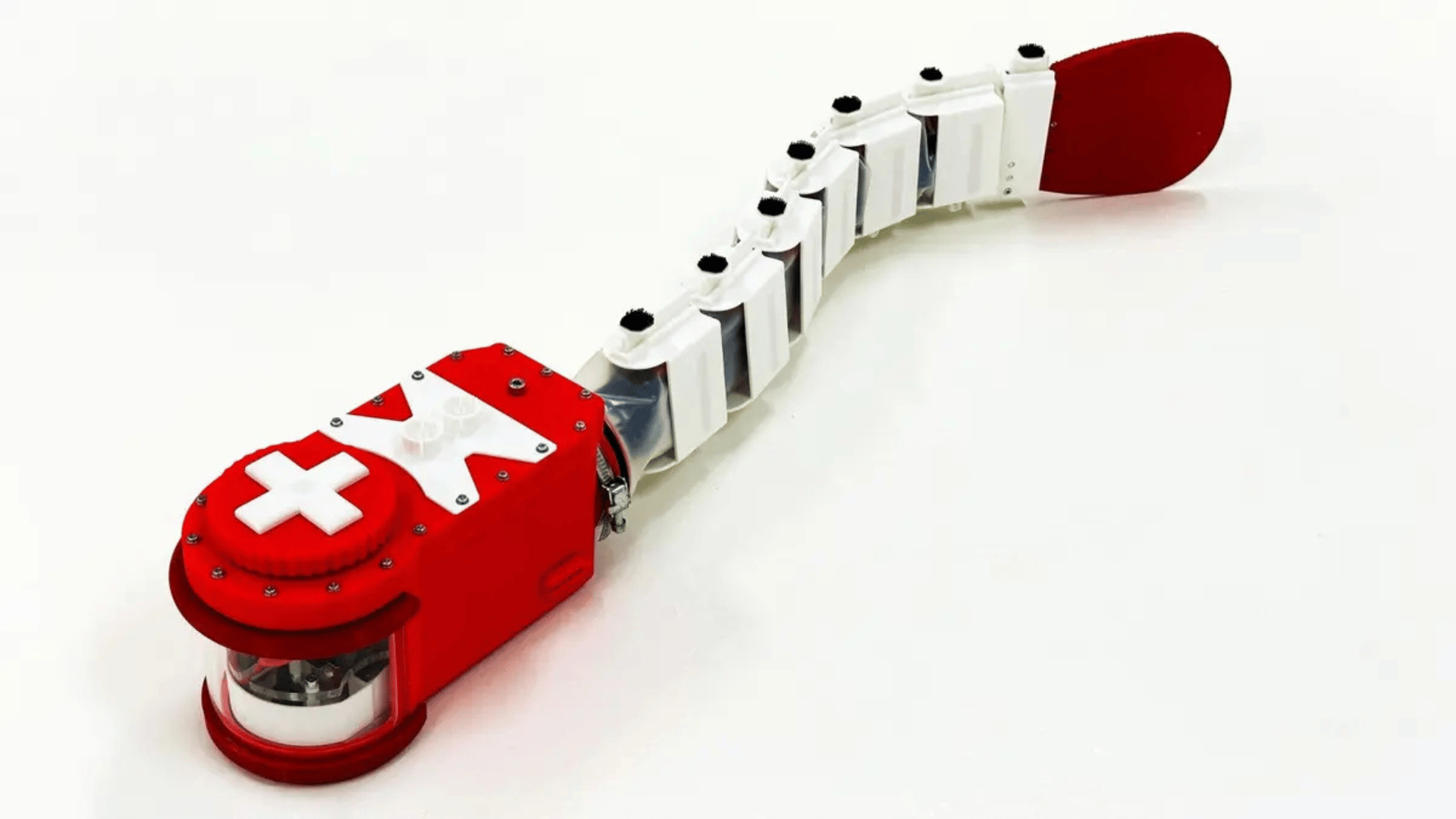

To test their model in the real world, EPFL postdoctoral researcher Xiangxiao Liu built an 80-centimeter robotic zebrafish larva equipped with two cameras for eyes, motors for its tail, and the same neural circuits used in the simulation. When released into Lausanne’s Chamberonne River, the robot could hold its position against the current, just like a real zebrafish.

“The emergence of the optomotor response from our neural circuitry is significant, as some of an animal’s response to any stimulus is random. Despite this randomness, the neural circuitry still converged to reorient the robot and maintain its position,” Liu said.

The findings were published in Science Robotics.