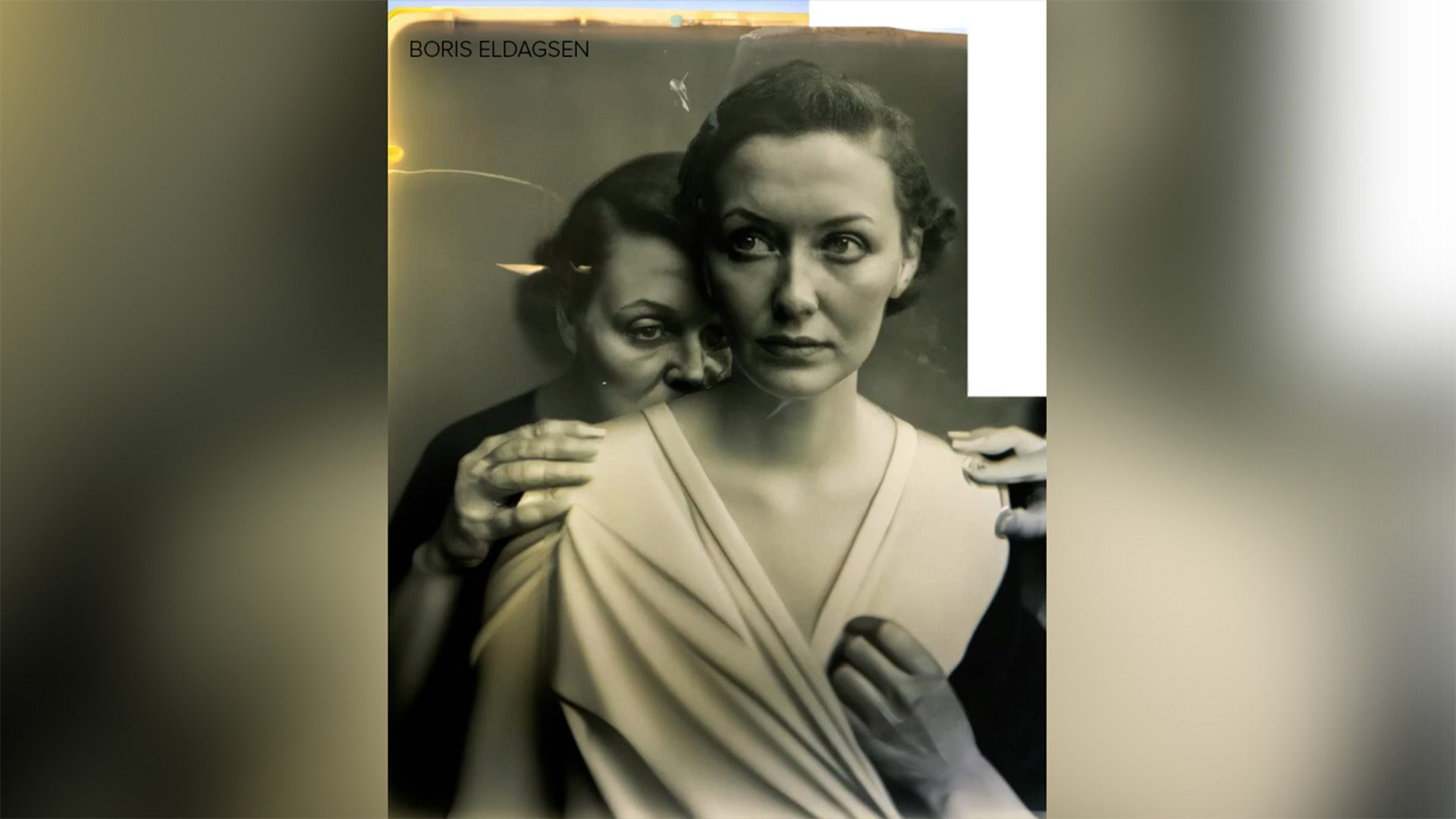

The rise of AI art tools threatens to put human artists out of work, and many AI models are trained on the work of human artists posted on the internet without their consent. Tools such as Glaze, however, are being developed to trick AI art tools.

Glaze, developed by computer scientists at the University of Chicago, uses machine-learning algorithms to digitally cloak images of artwork in a way that thwarts AI models’ attempts to understand them.

For example, an artist can upload an image of their oil painting that has been run through Glaze. This will cause the AI model to read the painting as something like a charcoal drawing, despite it clearly being an oil painting to the human eye.

This tool allows artists to take a digital image of their artwork, run it through Glaze, “and afterward be confident that this piece of artwork will now look dramatically different to an AI model than it does to a human,” Ben Zhao, a professor of computer science at the University of Chicago and one of the lead researchers on the Glaze project, told CNN.

According to Zhao, the first prototype of Glaze was released in March 2023 and it has already surpassed one million downloads. A free version of the tool was also released earlier this month.

Jon Lam, an artist based in California, stated that he uses Glaze for all of the images of his artwork that he shares online.

“We know that people are taking our high-resolution work and they are feeding it into machines that are competing in the same space that we are working in,” Lam stated. “So now we have to be a little bit more cautious and start thinking about ways to protect ourselves.”

Eveline Fröhlich, a visual artist based in Stuttgart, Germany, also spoke about how Glaze has helped to protect artists in the age of AI.

“It gave us some way to fight back,” Fröhlich stated. “Up until that point, many of us felt so helpless with this situation, because there wasn’t really a good way to keep ourselves safe from it, so that was really the first thing that made me personally aware that: Yes, there is a point in pushing back.”

Though Glaze can help with some of the AI-related problems facing artists today, Lam also states that more needs to be done to regulate how tech companies can take data from the internet for AI training.

“Right now, we’re seeing artists kind of being the canary in the coal mine,” Lam said. “But it’s really going to affect every industry.”

Zhao has also stated that, since releasing Glaze, his team has received an outpouring of messages from people in other fields, including fiction writers, musicians, voice actors and journalists, asking about a version of Glaze for their field.

Another recent tool that aims to protect digital images from AI tools is PhotoGuard. The tool was created by Hadi Salman, a researcher at the Massachusetts Institute of Technology, alongside MIT researchers from the Computer Science and Artificial Intelligence Laboratory.

“We are in the era of deepfakes,” Salman told CNN. “Anyone can now manipulate images and videos to make people actually do something that they are not doing.”

The prototype of the technology puts an invisible “immunization” over images, which prevents AI models from manipulating or altering the picture. PhotoGuard works by adjusting the image’s pixels in a way that is imperceptible to the human eye. The aim of the tool is to protect photos that people upload online from any manipulation by AI models.
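To give a rough sense of what an "imperceptible" pixel adjustment can mean in practice, the sketch below caps how far any single pixel may move. It is an illustration only and not PhotoGuard's code: the function name `apply_bounded_perturbation`, the budget `epsilon=4 / 255`, and the random stand-in image are all assumptions made for this example.

```python
import random


def apply_bounded_perturbation(pixels, perturbation, epsilon=4 / 255):
    """Add a perturbation to an image while capping each per-pixel change.

    `pixels` is a flat list of brightness values in [0, 1].
    `epsilon` is a hypothetical budget chosen for illustration only.
    """
    protected = []
    for pixel, change in zip(pixels, perturbation):
        # Cap the change so no pixel moves by more than epsilon.
        change = max(-epsilon, min(epsilon, change))
        # Keep the result inside the valid brightness range.
        protected.append(max(0.0, min(1.0, pixel + change)))
    return protected


random.seed(0)
pixels = [random.random() for _ in range(64)]        # stand-in for a real photo
noise = [random.gauss(0.0, 0.1) for _ in range(64)]  # an arbitrary raw perturbation
protected = apply_bounded_perturbation(pixels, noise)

max_change = max(abs(a - b) for a, b in zip(protected, pixels))
print(f"largest per-pixel change: {max_change:.4f}")
```

A real tool would choose the perturbation deliberately rather than at random. The cap only guarantees that whatever perturbation is chosen stays small enough to be hard to see.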

The tool uses two different “attack” methods to generate the perturbations. One is the “encoder” attack, which targets the image’s latent representation in the AI model, causing the model to perceive the image as a random entity. The second is the “diffusion” attack, which defines a target image and optimizes the perturbations to make the final image resemble the target as closely as possible.

“But this imperceptible change is strong enough and it’s carefully crafted such that it actually breaks any attempts to manipulate this image by these AI models,” Salman added.
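The encoder attack described above can be sketched with a toy model. This is a minimal illustration under loud assumptions, not the researchers' implementation: a small random linear map stands in for the real image encoder, the target is the latent of a flat gray image, and every name and parameter (`encode`, `encoder_attack`, `epsilon`, `step`, `iters`) is hypothetical. The loop takes small signed gradient steps and, after each one, clips the perturbation back inside the per-pixel budget.

```python
import random


def encode(weights, pixels):
    """Toy linear 'encoder': each latent value is a weighted sum of the pixels."""
    return [sum(w * p for w, p in zip(row, pixels)) for row in weights]


def latent_distance(weights, pixels, target_latent):
    """Squared distance between the image's latent and the target latent."""
    return sum((z - t) ** 2 for z, t in zip(encode(weights, pixels), target_latent))


def encoder_attack(weights, pixels, target_latent,
                   epsilon=8 / 255, step=1 / 255, iters=50):
    """Search for a small perturbation that pulls the latent toward the target.

    All parameter values are assumptions chosen for illustration.
    """
    delta = [0.0] * len(pixels)
    best_delta = list(delta)
    best_loss = latent_distance(weights, pixels, target_latent)
    for _ in range(iters):
        perturbed = [p + d for p, d in zip(pixels, delta)]
        residual = [z - t for z, t in zip(encode(weights, perturbed), target_latent)]
        # Gradient of the squared distance with respect to each pixel.
        grad = [2 * sum(weights[k][i] * residual[k] for k in range(len(weights)))
                for i in range(len(pixels))]
        for i, g in enumerate(grad):
            d = delta[i] - step * ((g > 0) - (g < 0))      # signed gradient step
            d = max(-epsilon, min(epsilon, d))             # stay within the budget
            d = max(-pixels[i], min(1.0 - pixels[i], d))   # keep pixel in [0, 1]
            delta[i] = d
        loss = latent_distance(
            weights, [p + d for p, d in zip(pixels, delta)], target_latent)
        if loss < best_loss:                               # remember the best result
            best_delta, best_loss = list(delta), loss
    return [p + d for p, d in zip(pixels, best_delta)]


random.seed(0)
n_pixels, n_latent = 32, 4
weights = [[random.uniform(-1, 1) for _ in range(n_pixels)] for _ in range(n_latent)]
pixels = [random.random() for _ in range(n_pixels)]
target_latent = encode(weights, [0.5] * n_pixels)  # latent of a flat gray image

protected = encoder_attack(weights, pixels, target_latent)
before = latent_distance(weights, pixels, target_latent)
after = latent_distance(weights, protected, target_latent)
print(f"latent distance to target: {before:.3f} before, {after:.3f} after")
```

In this toy setting the protected image differs from the original by at most `epsilon` per pixel, yet its latent sits closer to the gray target. The diffusion attack follows the same pattern in spirit, but measures the distance on the model's final output rather than on the encoder's latent, which makes it considerably more expensive to run.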