A startup founded by three former OpenAI researchers is using the development methods behind chatbots to build AI technology that can navigate the physical world.

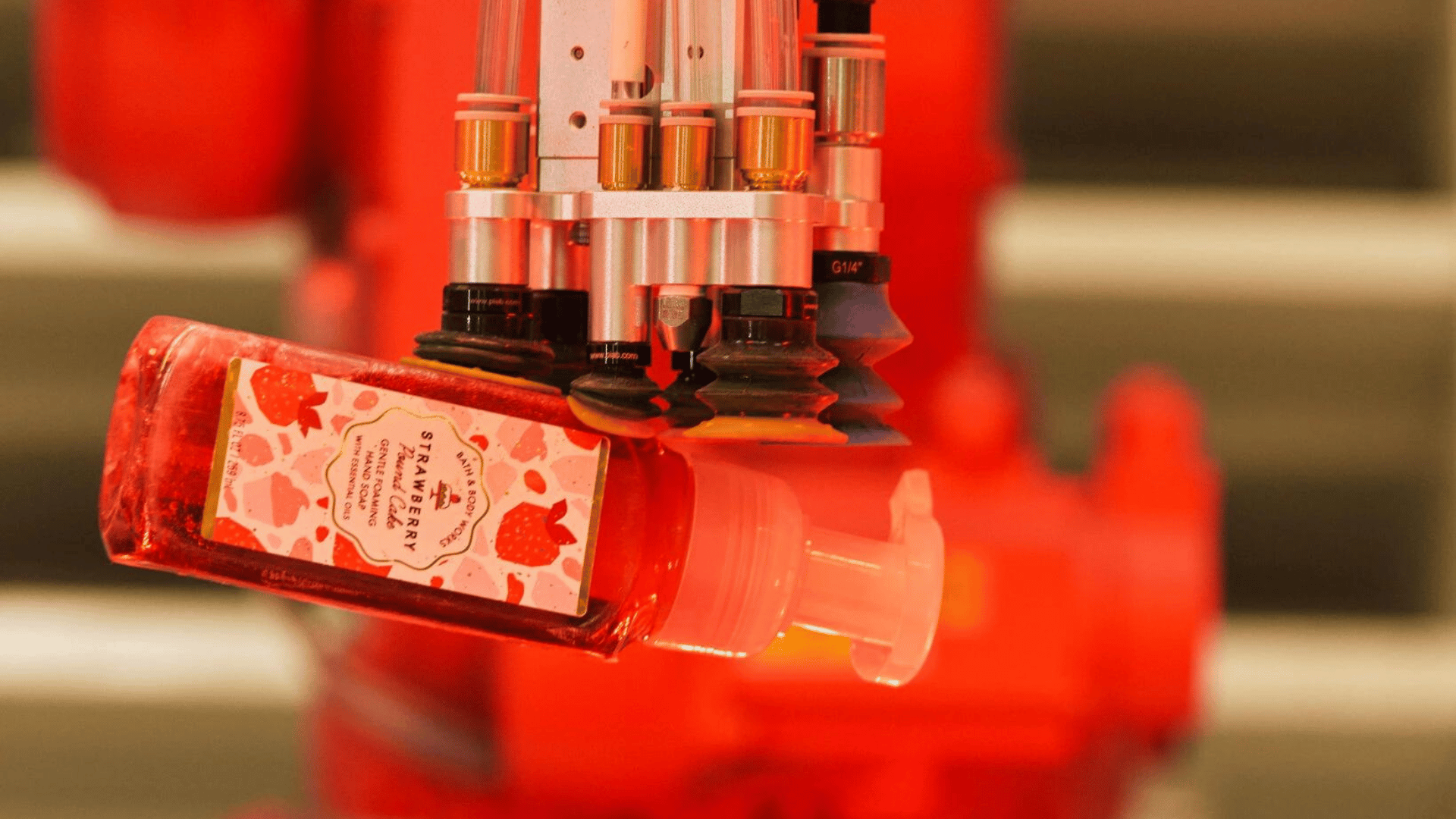

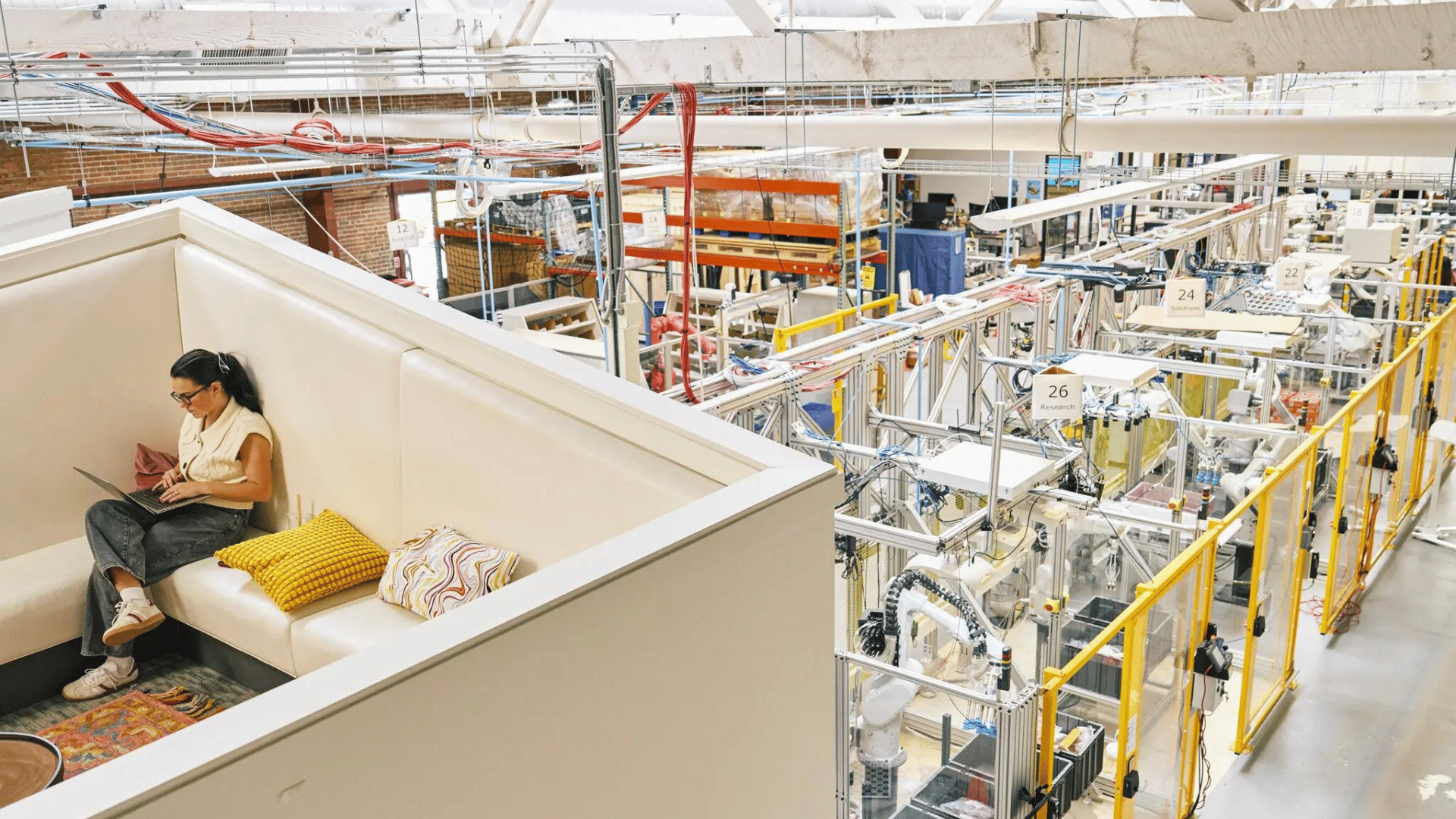

The company, Covariant, is headquartered in Emeryville, California. It is developing ways for robots to pick up, move, and sort items as they pass through warehouses and distribution centers.

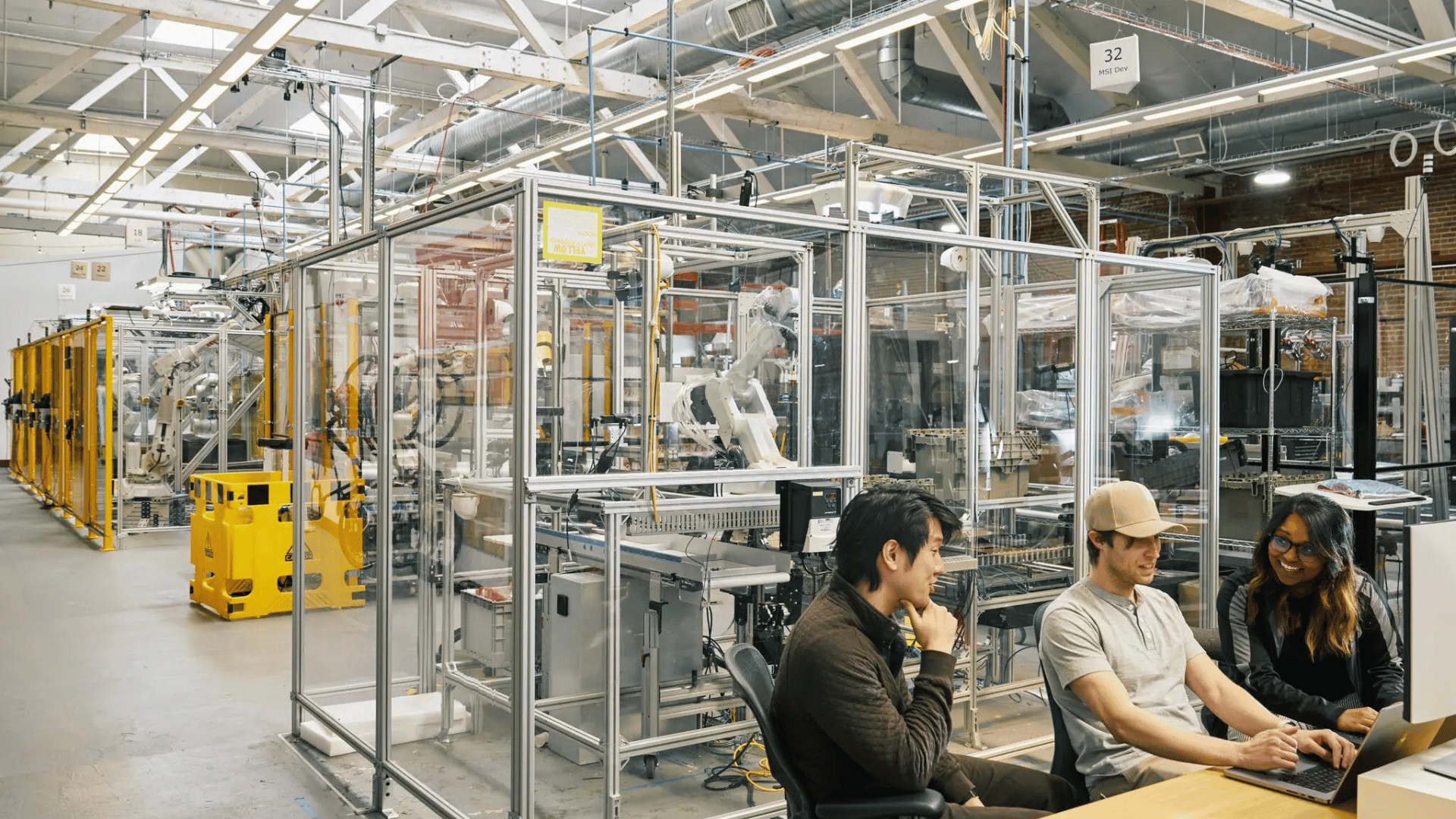

The goal is to give robots a better understanding of what is happening around them so they can decide what to do next. The technology also lets robots understand English, so people can talk to them much as they would talk to ChatGPT.

Like image generators and chatbots, this robotics technology learns its abilities by analyzing large amounts of digital data. Backed by $222 million in funding, Covariant plans to debut the technology in warehouse robots, providing a blueprint for others to apply it in manufacturing plants.

The company has spent years gathering data from cameras and other sensors that shows how these robots operate. By combining text data with other types of data, such as captioned videos and photos, the company has built AI technology that gives the robots a more comprehensive understanding of the world around them.


“It ingests all kinds of data that matter to robots, that can help them understand the physical world and interact with it,” said Dr. Peter Chen, Covariant’s co-founder and chief executive.

The technology can identify patterns across images, text, and sensor data, which helps the robots handle unexpected situations in the physical world. For example, it can work out how to pick up a banana even if it has never handled one before.

It can also respond to spoken English, much like a chatbot. Tell it to “pick up a banana,” and it knows what that means. Tell it to “pick up a yellow fruit,” and it understands that as well.

The system is called R.F.M., or Robotics Foundational Model. It is still being refined and will make mistakes, but the software is expected to improve as companies train it on larger and more varied collections of data. By pairing thousands of physical-world examples with language, the robots should become more nimble and better able to handle unexpected situations.

“What is in the digital data can transfer into the real world,” Dr. Chen said.