Four months after releasing the groundbreaking ChatGPT, OpenAI announced its smarter and safer successor, GPT-4. According to the company, GPT-4 can solve more difficult problems with greater accuracy while exhibiting human-level performance on various benchmarks.

1. More Creative

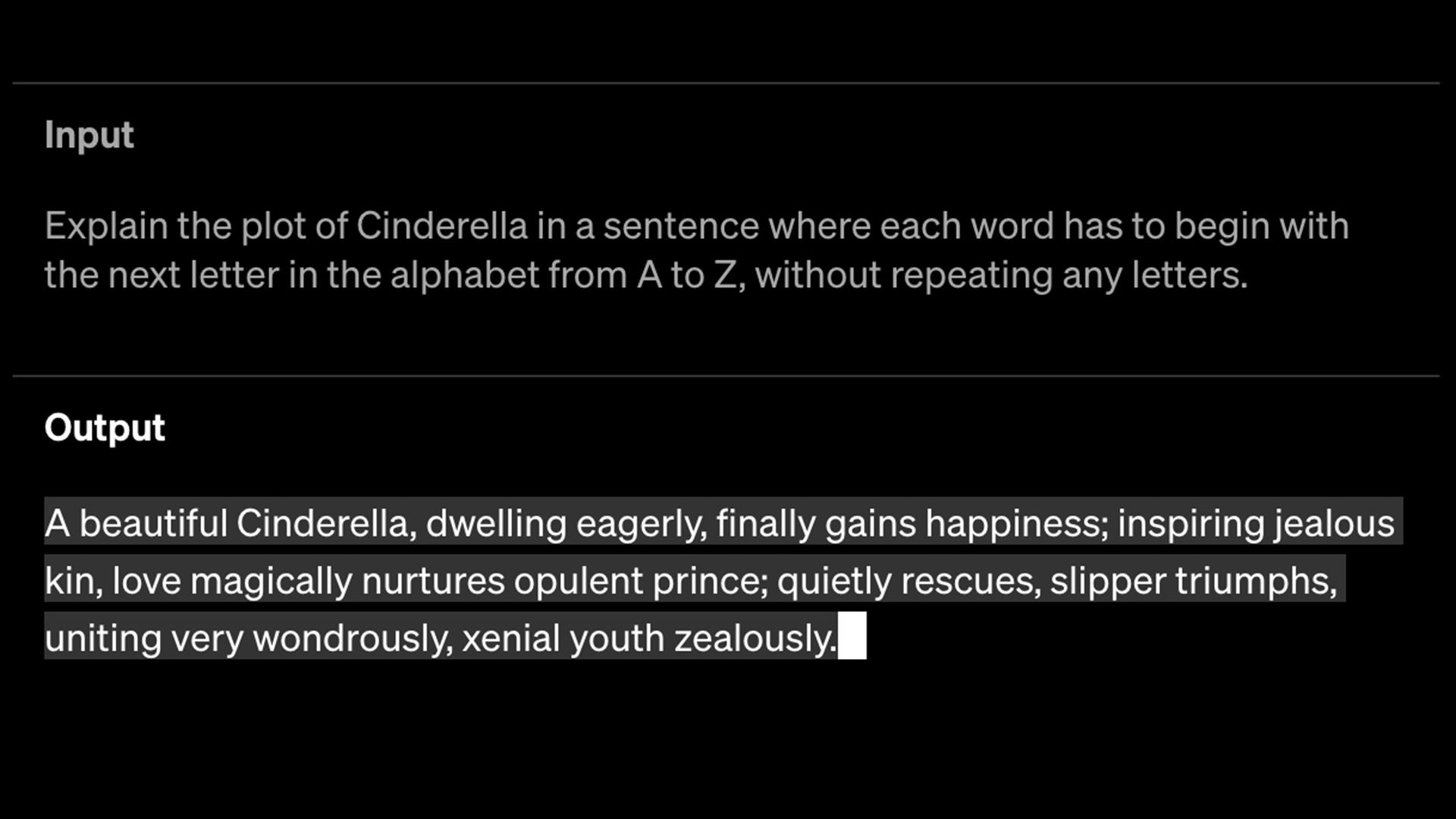

OpenAI describes GPT-4 as more creative and collaborative than the models previously behind ChatGPT. The new large language model can generate, edit, and iterate with users on creative writing tasks, and it is better at mimicking a user’s writing style. Creative writing tasks that GPT-4 can perform include composing songs and writing screenplays.

Consider the prompt in the image above. The chatbot successfully completes a task as complicated as “Explain the plot of Cinderella in a sentence where each word has to begin with the next letter in the alphabet from A to Z, without repeating any letters.”

2. Longer Form Analysis

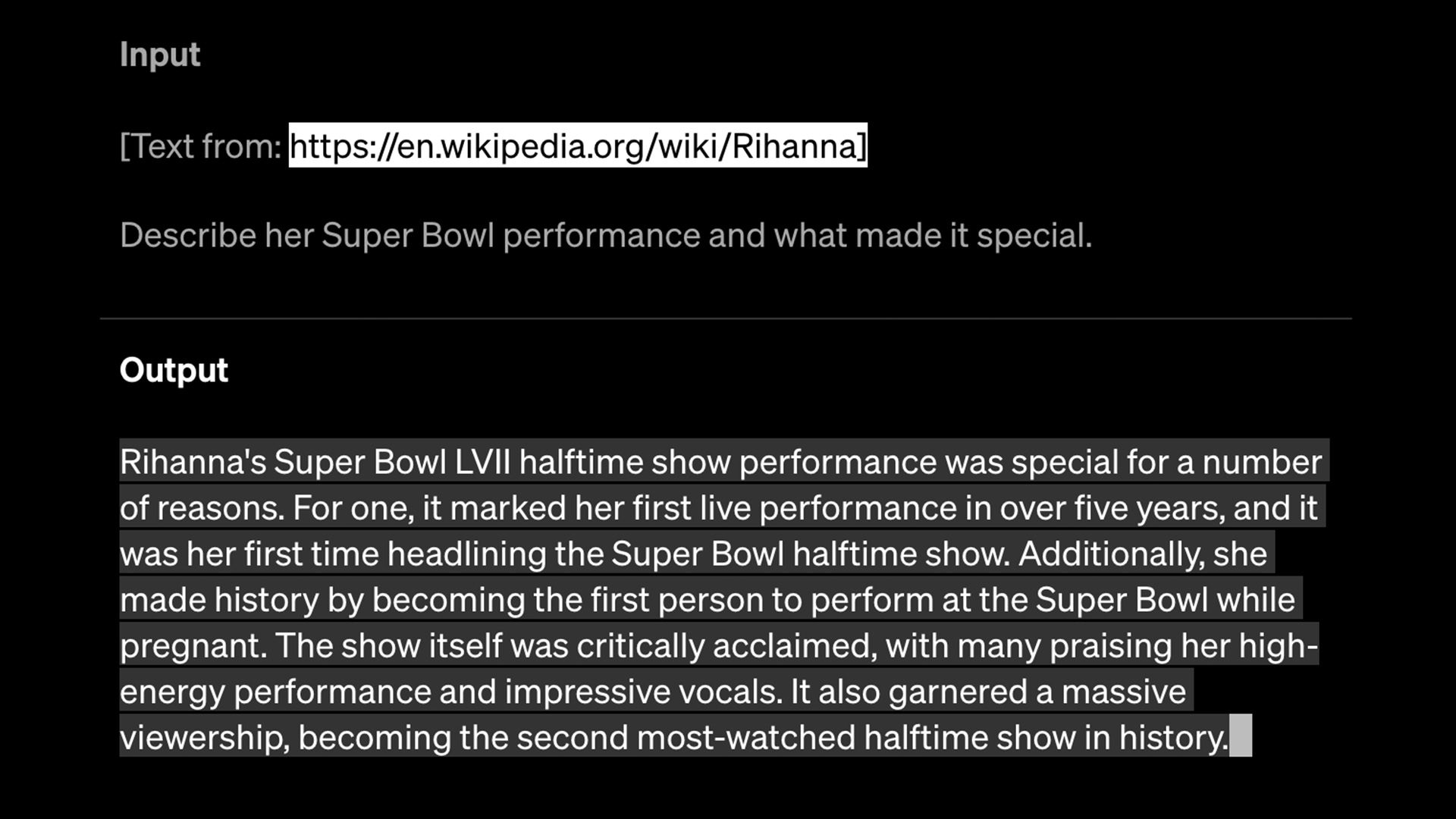

GPT-4 can handle over 25,000 words of text, whereas GPT-3.5 was limited to 8,000 words. This larger capacity makes it better suited to long-form content creation, extended conversations, and document search and analysis.

For example, the chatbot was given Rihanna’s lengthy Wikipedia page and asked to analyze her Super Bowl performance and explain what made it special.

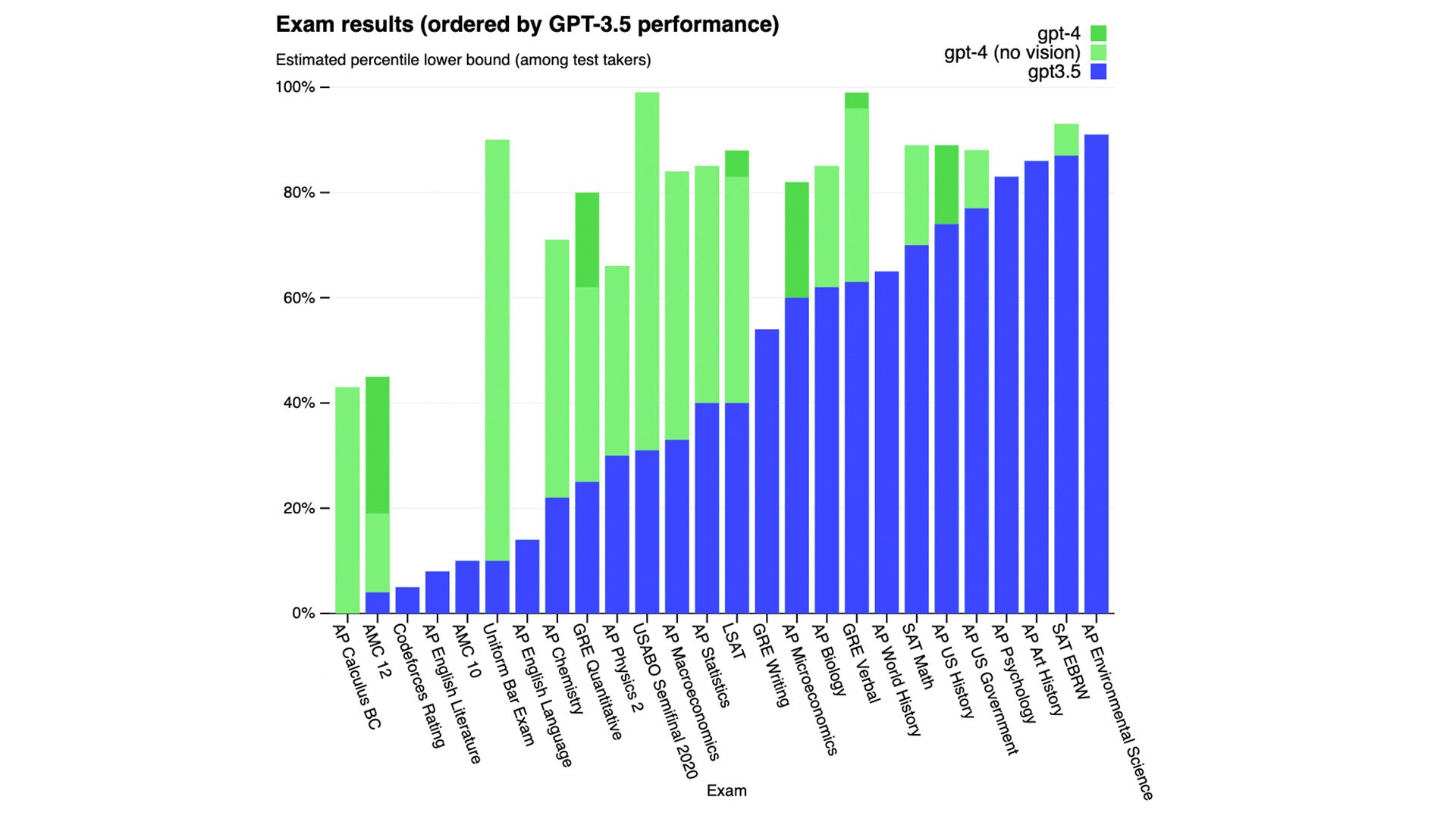

3. Increased Ability to Pass Standardized Tests

Whereas ChatGPT performed poorly on a variety of standardized exams, GPT-4 scores in much higher approximate percentiles among test takers. On the Uniform Bar Examination, for example, GPT-4 scored around the 90th percentile, while ChatGPT scored around the 10th.

OpenAI’s tests also suggest that the system could receive the top score of 5 on Advanced Placement exams in subjects such as biology, psychology, statistics, and history.

4. Multimodal

Unlike its predecessors, GPT-4 is a large multimodal model. This means it can accept images as inputs and generate captions, classifications, and analyses based on what the images contain. This feature could be useful in various industries, such as healthcare, where medical images could be analyzed and interpreted.

The feature could even help you decide what to make for dinner. When given an image of food items and the prompt “What can I make with these ingredients?”, the model suggested several accurate options.

5. Safer

OpenAI reportedly spent six months making GPT-4 safer and more aligned. According to the company’s internal evaluations, GPT-4 is 82 percent less likely than GPT-3.5 to respond to requests for disallowed content and 40 percent more likely to produce factual responses.

The team even used GPT-4’s advanced reasoning and instruction-following capabilities to help improve the model itself, using it to “…create training data for model fine-tuning and iterate on classifiers across training, evaluations, and monitoring.”

GPT-4’s Limitations & Availability

Even though GPT-4 is OpenAI’s most advanced system, it still has many known limitations and areas for improvement. For example, the company disclosed that the model exhibits social biases, can hallucinate, and is vulnerable to adversarial prompts, all of which need to be addressed. In the meantime, OpenAI says it encourages and facilitates “…transparency, user education, and wider AI literacy as society adopts these models.”

As of March 2023, GPT-4 is available only to ChatGPT Plus subscribers and, through an API, to developers building applications and services.