A new system developed by MIT researchers enhances a robot’s ability to build a 3D map of its environment and localize itself within that map. The system enables robots to perform both tasks using only visual data. Rescue robots face the critical challenge of efficiently processing massive amounts of image data to navigate complex environments in real time.

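Simultaneous localization and mapping (SLAM), in which a robot builds a map while tracking its own position within it, is the standard approach to this challenge. The new system is poised to accelerate robot deployment in high-stakes search-and-rescue operations.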

Search and rescue robots must concurrently build a map of their surroundings and determine their position within that map. Machine learning models were developed to take on this task. However, the models’ inability to process more than a handful of images at a time is a major limitation. As a result, they become impractical for real-world scenarios where a robot must traverse a wide area and process thousands of images.

Real-Time 3D Robotic Mapping

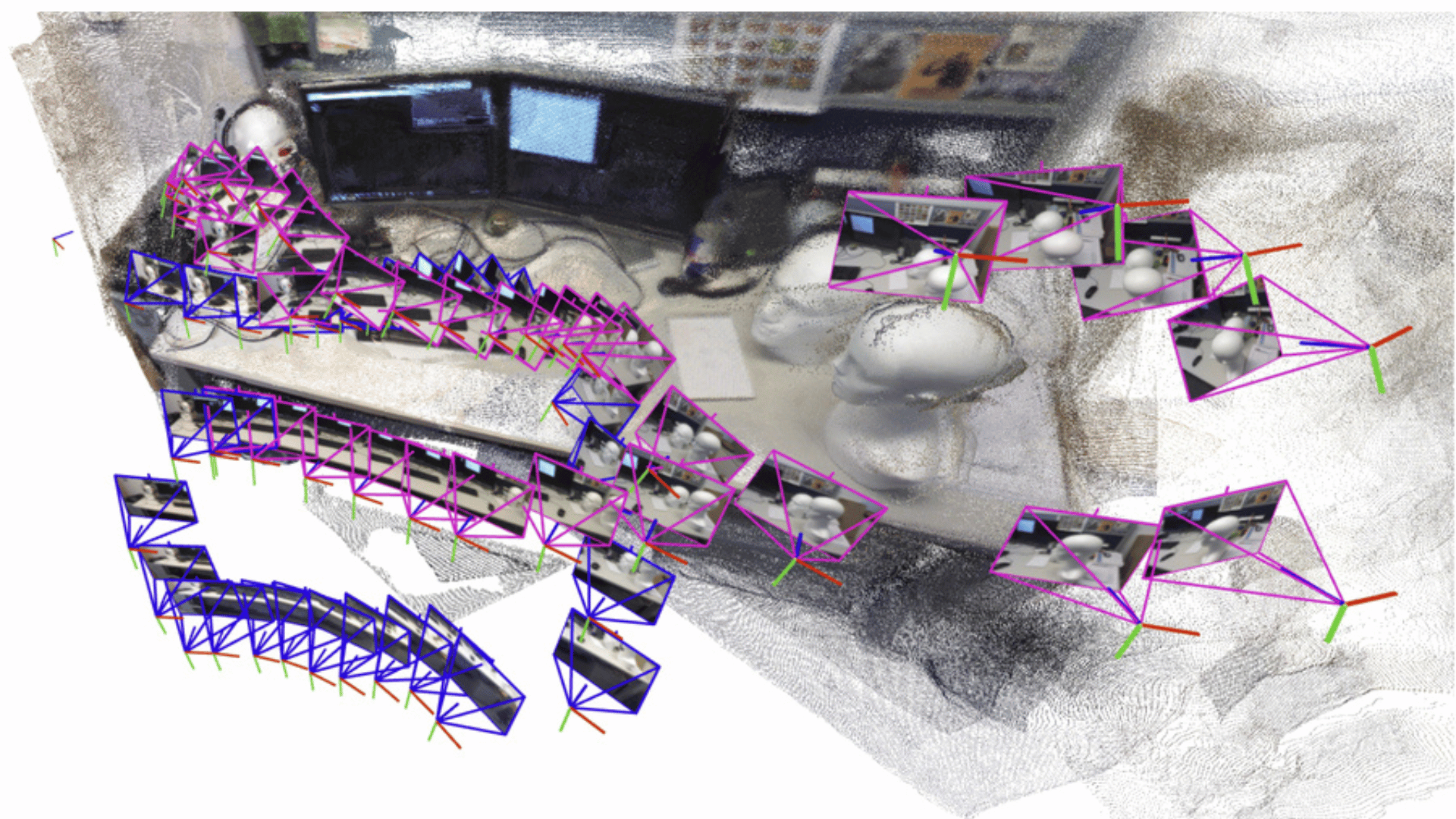

MIT researchers combine artificial intelligence vision models with classical computer vision techniques to overcome this challenge. Their system is designed to process an arbitrary number of images by incrementally creating and aligning smaller “submaps” of the scene. It then stitches the submaps together to form a complete 3D reconstruction.
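The idea of cutting a long image stream into small submaps can be sketched in a few lines of Python. This is only an illustration, not the researchers' code: the function name `iter_submaps`, the window size, and the choice to share one frame between consecutive submaps (so that neighbors have something in common to align on) are all assumptions made for the example.

```python
def iter_submaps(frames, size, overlap=1):
    """Yield fixed-size windows of frames as they arrive.

    Hypothetical helper: consecutive windows share `overlap` frames,
    an assumed way of giving neighboring submaps common content.
    """
    if not 0 <= overlap < size:
        raise ValueError("overlap must satisfy 0 <= overlap < size")
    buffer = []
    fresh = 0  # frames added since the last window was emitted
    for frame in frames:
        buffer.append(frame)
        fresh += 1
        if len(buffer) == size:
            yield list(buffer)
            # Keep only the shared tail for the next window.
            buffer = buffer[size - overlap:]
            fresh = 0
    if fresh:
        # Emit a final, shorter window if unprocessed frames remain.
        yield buffer


# Ten frame indices, four frames per submap, one shared frame.
submaps = list(iter_submaps(range(10), size=4, overlap=1))
print(submaps)  # [[0, 1, 2, 3], [3, 4, 5, 6], [6, 7, 8, 9]]
```

Because the function is a generator, each window can be handed to a vision model as soon as it fills, so the number of images held in memory at once stays small no matter how long the stream runs.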

The submap approach lets the overall system reconstruct larger scenes much faster, even though the ML model itself processes only a few images at a time. Interestingly, initial attempts to simply “glue” the submaps together failed. This prompted the lead researcher, Dominic Maggio, to revisit computer vision research from the 1980s and 1990s. The team discovered the cause: the ML models introduced subtle deformations into the submaps. As a result, simple geometric rotations and translations were insufficient for accurate alignment.

The researchers, including Hyungtae Lim and Luca Carlone, developed a more flexible mathematical technique to account for these deformations. Carlone said, “We need to make sure all the submaps are deformed in a consistent way so we can align them well with each other.”
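A toy example helps show why rotations and translations alone can fall short. The sketch below is not the team's method: it works in 2D rather than 3D, the point sets are made up, and an affine fit is used purely as a stand-in for "a more flexible transformation", since the article does not specify the actual technique. It fits the same pair of point sets twice, once with a rigid transform and once with an affine one, and compares the leftover error.

```python
import math


def centered(pts):
    """Shift points so their centroid sits at the origin."""
    n = len(pts)
    cx = sum(x for x, _ in pts) / n
    cy = sum(y for _, y in pts) / n
    return [(x - cx, y - cy) for x, y in pts]


def fit_rigid(src, dst):
    """Least-squares 2D rotation (translation is handled by centering)."""
    a, b = centered(src), centered(dst)
    dot = sum(ax * bx + ay * by for (ax, ay), (bx, by) in zip(a, b))
    cross = sum(ax * by - ay * bx for (ax, ay), (bx, by) in zip(a, b))
    theta = math.atan2(cross, dot)
    c, s = math.cos(theta), math.sin(theta)
    return ((c, -s), (s, c))


def fit_affine(src, dst):
    """Least-squares 2x2 linear map via the normal equations."""
    a, b = centered(src), centered(dst)
    sxx = sum(ax * ax for ax, _ in a)
    sxy = sum(ax * ay for ax, ay in a)
    syy = sum(ay * ay for _, ay in a)
    det = sxx * syy - sxy * sxy
    inv = ((syy / det, -sxy / det), (-sxy / det, sxx / det))
    # Cross-covariance between destination and source coordinates.
    bat = (
        (sum(bx * ax for (ax, _), (bx, _) in zip(a, b)),
         sum(bx * ay for (_, ay), (bx, _) in zip(a, b))),
        (sum(by * ax for (ax, _), (_, by) in zip(a, b)),
         sum(by * ay for (_, ay), (_, by) in zip(a, b))),
    )
    return tuple(
        tuple(sum(bat[r][k] * inv[k][c] for k in range(2)) for c in range(2))
        for r in range(2)
    )


def rms_residual(m, src, dst):
    """Root-mean-square distance left after applying the fitted map."""
    a, b = centered(src), centered(dst)
    sq = 0.0
    for (ax, ay), (bx, by) in zip(a, b):
        px = m[0][0] * ax + m[0][1] * ay
        py = m[1][0] * ax + m[1][1] * ay
        sq += (px - bx) ** 2 + (py - by) ** 2
    return math.sqrt(sq / len(a))


def deform_and_move(p):
    """Made-up distortion: stretch and shear, then rotate 30 degrees and shift."""
    x, y = 1.2 * p[0] + 0.1 * p[1], p[1]
    c, s = math.cos(math.radians(30)), math.sin(math.radians(30))
    return (c * x - s * y + 3.0, s * x + c * y - 1.0)


submap_a = [(0.0, 0.0), (1.0, 0.0), (1.0, 1.0), (0.0, 1.0), (2.0, 0.5)]
submap_b = [deform_and_move(p) for p in submap_a]

rigid_err = rms_residual(fit_rigid(submap_a, submap_b), submap_a, submap_b)
affine_err = rms_residual(fit_affine(submap_a, submap_b), submap_a, submap_b)
print(f"rigid residual:  {rigid_err:.4f}")
print(f"affine residual: {affine_err:.2e}")
```

In this toy setup the rigid fit leaves a visible residual because no rotation can undo a stretch, while the affine fit drives the error to essentially zero. The real system faces a harder version of the same mismatch in 3D.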

“For robots to accomplish increasingly complex tasks, they need much more complex map representations of the world around them,” said Maggio. “We’ve shown that it is possible to generate an accurate 3D reconstruction in a matter of seconds with a tool that works out of the box.”

The implications of the mapping technology go beyond rescue operations. For example, the researchers see it as beneficial for industrial robotics, warehouse logistics, and extended reality (XR) applications such as VR headsets.