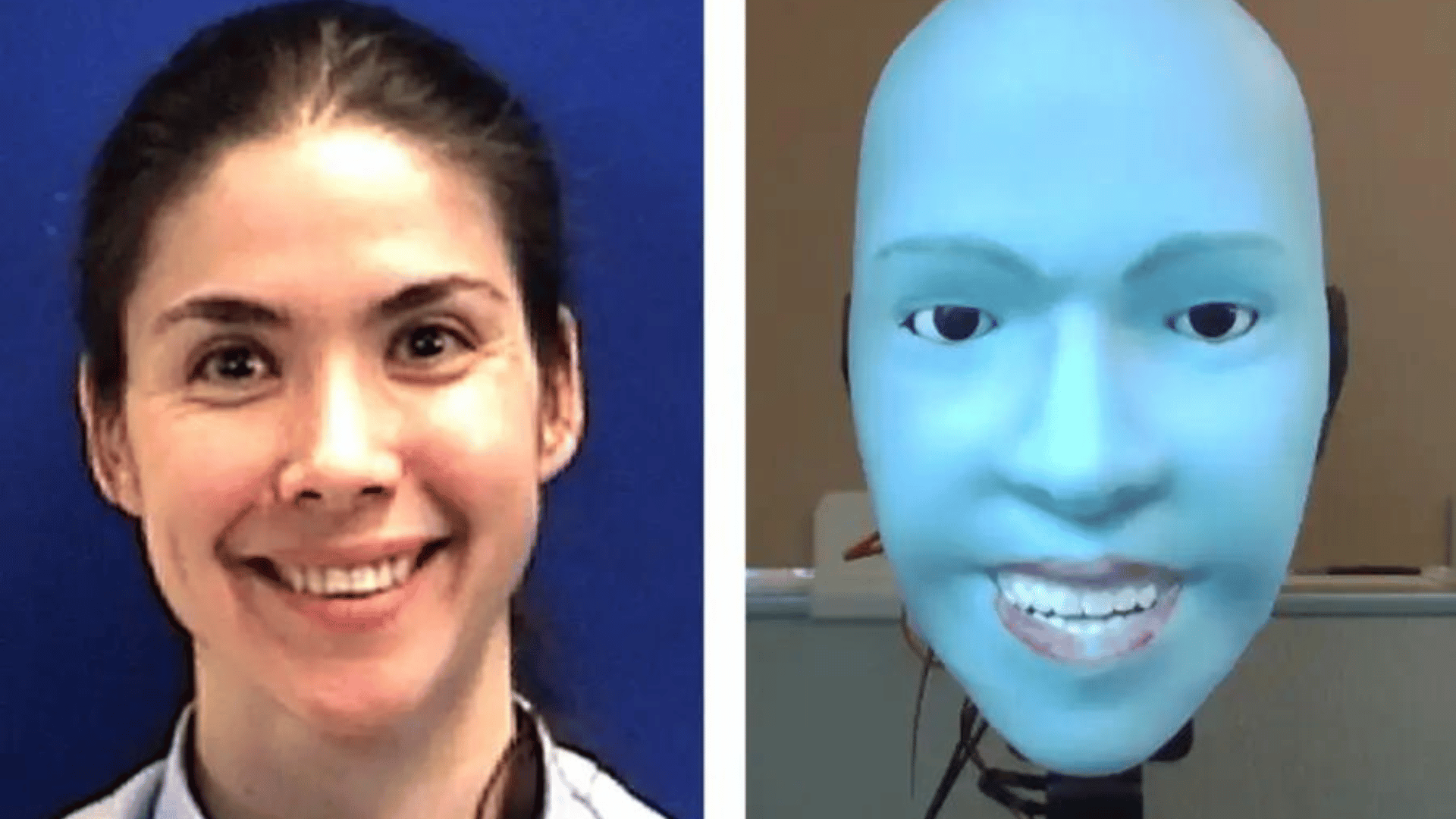

Researchers have created a humanoid robot that can predict a person’s smile about a second before it happens and mirror the smile on its own face.

Though artificial intelligence can now mimic human language, interactions with physical robots remain less convincing because robots struggle to replicate the complex non-verbal cues and mannerisms that human communication relies on.

Hod Lipson and his colleagues at Columbia University in New York have created a robot called Emo that uses AI models and high-resolution cameras to predict and replicate people’s facial expressions. The robot can anticipate whether someone will smile approximately 0.9 seconds before they do, and smile itself in sync.

“I’m a jaded roboticist, but I smile back at this robot,” says Lipson.

Emo consists of a face with cameras in its eyes and a flexible plastic skin that is moved by 24 separate motors attached to it by magnets. It uses two neural networks: one watches human faces and predicts their upcoming expressions, and the other works out how to produce those expressions on the robot’s own face.

The first network was trained on YouTube videos of people making faces, and the second was trained by having the robot watch itself make faces on a live camera feed.

“It learns what its face is going to look like when it’s going to pull all these muscles,” says Lipson. “It’s sort of like a person in front of a mirror, when even if you close your eyes and smile, you know what your face is going to look like.”
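The "mirror" idea can be illustrated with a toy sketch. The article gives no implementation details, so everything here is an assumption made for illustration: the `simulated_face` function stands in for the real skin and camera, the two landmark measurements are invented, and a simple nearest-neighbour lookup takes the place of the team's neural network. The robot "babbles" random motor commands, records what its face looks like for each, and later reuses the command whose remembered result best matches a target expression.

```python
import random

NUM_MOTORS = 24  # motor count quoted in the article


def simulated_face(command):
    """Hypothetical stand-in for the robot's skin and camera.

    Maps a motor command (24 values in [0, 1]) to two invented landmark
    measurements: mouth-corner lift and brow raise.
    """
    mouth_lift = sum(command[:12]) / 12
    brow_raise = sum(command[12:]) / 12
    return (mouth_lift, brow_raise)


def babble(num_samples, rng):
    """Issue random motor commands and record what the 'camera' sees."""
    memory = []
    for _ in range(num_samples):
        command = [rng.random() for _ in range(NUM_MOTORS)]
        memory.append((simulated_face(command), command))
    return memory


def inverse_model(memory, target):
    """Return the remembered command whose observed face is closest to target."""
    def squared_distance(entry):
        observed, _ = entry
        return sum((o - t) ** 2 for o, t in zip(observed, target))

    _, best_command = min(memory, key=squared_distance)
    return best_command


rng = random.Random(0)          # fixed seed so the run is repeatable
memory = babble(2000, rng)      # the "watching itself in a mirror" phase
smile_target = (0.6, 0.45)      # made-up landmark values for a mild smile
command = inverse_model(memory, smile_target)
achieved = simulated_face(command)
print("target:", smile_target, "achieved:", achieved)
```

A lookup table like this only reproduces faces the robot has already seen itself make. A trained network, as described in the article, can presumably generalise between those examples, which is why this sketch should be read as an analogy and not as the researchers' method.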

Lipson and his team ultimately hope that Emo’s technology will make human-robot interactions feel more lifelike. They plan to widen the range of expressions the robot can make and to train it to respond to what people are saying, rather than simply mimicking them.