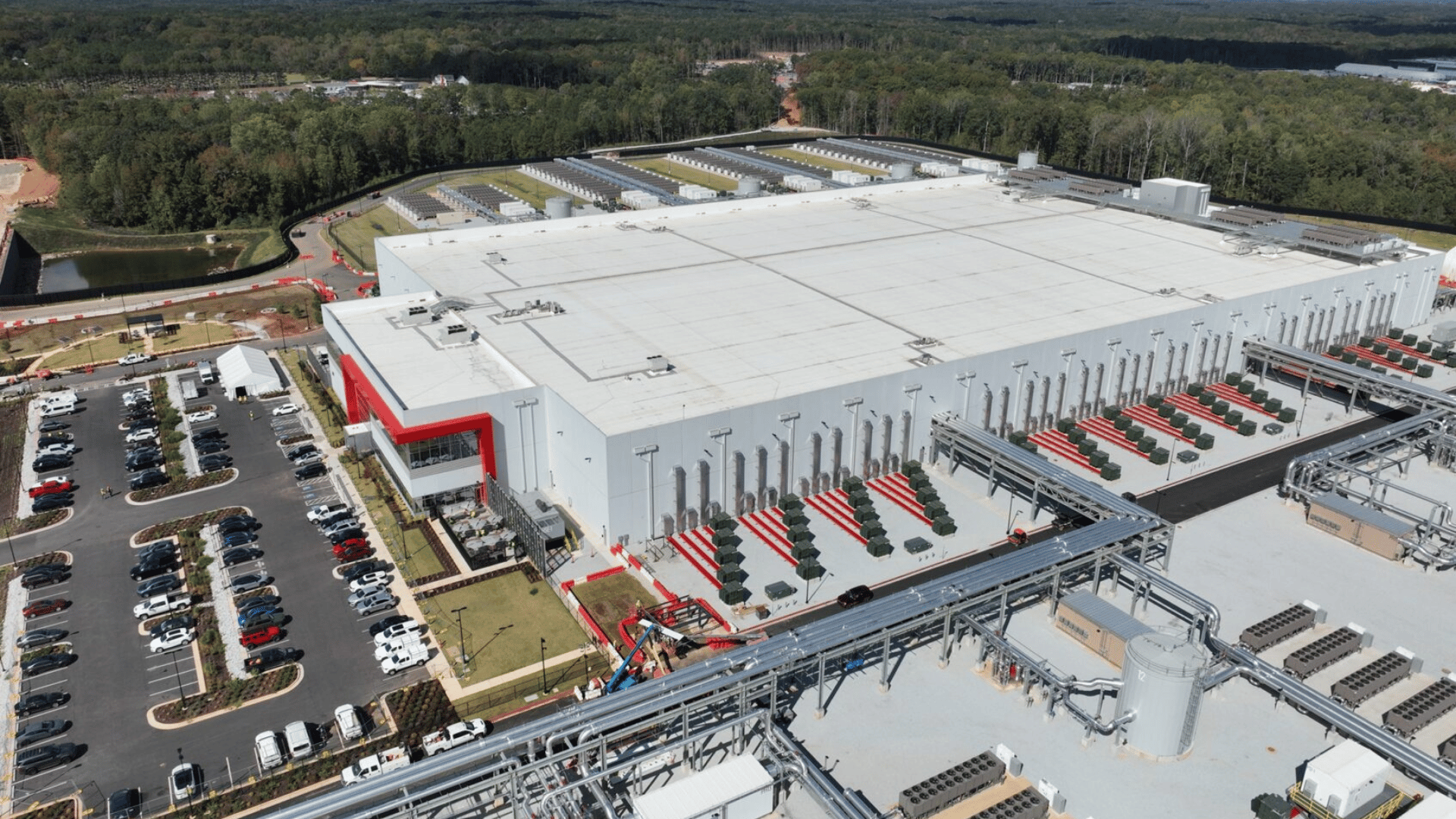

Tech giant Microsoft is opening a new phase in artificial intelligence infrastructure. The company launched its “Fairwater” family of AI datacenters, which interconnect to create an “AI superfactory.” Microsoft’s newest site, the second of its kind, is located in Atlanta and operates in conjunction with the recently announced Wisconsin facility.

This marks the beginning of a push to transition from isolated facilities to a distributed network designed to accelerate AI breakthroughs.

AI Superfactory

There is nothing traditional about the Fairwater datacenters. “The reason we call this an AI superfactory is it’s running one complex job across millions of pieces of hardware,” said Alistair Speirs, Microsoft general manager. “And it’s not just a single site training an AI model, it’s a network of sites supporting that one job.”

Unifying the datacenters allows the network to train new AI models in weeks rather than months.

The AI “superfactory” is powered by its dedicated high-speed network, known as the AI Wide Area Network. This network uses fiber-optic cables, which enable data to travel between sites at nearly the speed of light. Microsoft deployed 120,000 miles of cable in one year. Inside, the datacenters have a two-story architecture that increases GPU density and minimizes delays between hundreds of thousands of advanced GPUs.

The design also incorporates advanced liquid cooling that consumes “almost zero water in its operations.”

“Leading in AI isn’t just about adding more GPUs – it’s about building the infrastructure that makes them work together as one system,” said Microsoft executive vice president of Cloud + AI Scott Guthrie. Microsoft engineers believe that this type of infrastructure is necessary due to the computational demands of modern AI models.

The Microsoft team also considers the distributed approach necessary because of the sheer scale required to train models with trillions of parameters.

Mark Russinovich, CTO, deputy CISO, and technical fellow for Microsoft Azure, explained, “You really need to make it so that you can train across multiple regions, and nobody’s really run into that problem yet because they haven’t gotten to the scale we’re at now.”