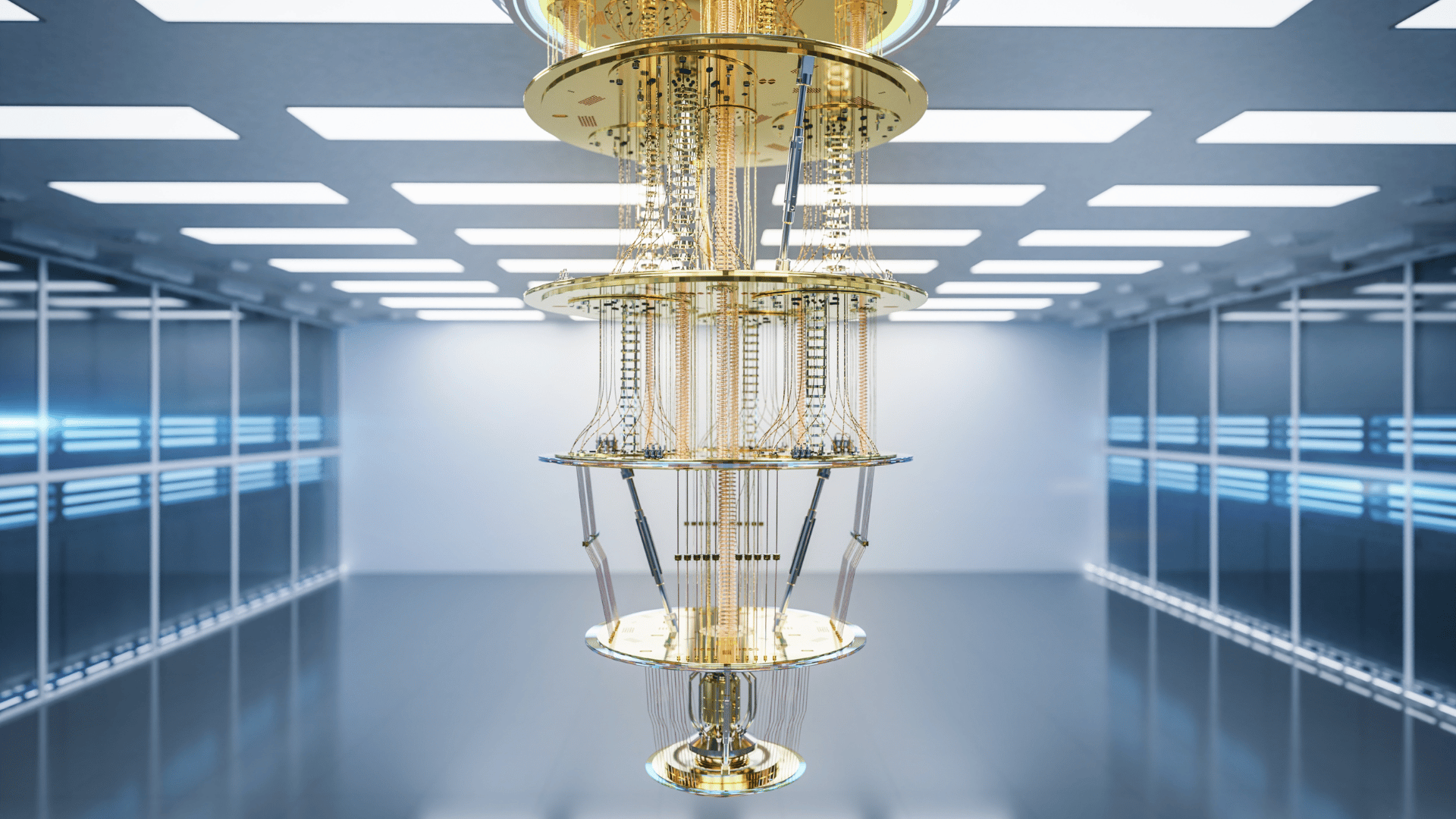

A new class of highly efficient and scalable error correction codes could lead to a major breakthrough in quantum computing. The work comes from the Institute of Science Tokyo (Science Tokyo), where scientists report having developed novel codes whose performance approaches the theoretical hashing bound, a benchmark for the maximum rate at which information can be reliably transmitted over a quantum channel.
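The article does not say which noise model the hashing bound is measured against. A common benchmark is the depolarizing channel, in which X, Y and Z errors each occur with probability p/3. For that channel the hashing bound gives the achievable rate R(p) = 1 - h(p) - p*log2(3), where h is the binary entropy. The minimal Python sketch below, assuming the depolarizing channel and using hypothetical function names, evaluates this formula and finds by bisection the noise level at which the rate falls to zero.

```python
import math


def binary_entropy(p: float) -> float:
    """Binary entropy h(p) in bits; h(0) = h(1) = 0 by convention."""
    if p <= 0.0 or p >= 1.0:
        return 0.0
    return -p * math.log2(p) - (1.0 - p) * math.log2(1.0 - p)


def hashing_rate_depolarizing(p: float) -> float:
    """Hashing-bound rate for a depolarizing channel with total error probability p.

    Assumes X, Y and Z errors each occur with probability p / 3, so the
    entropy of the Pauli error distribution is h(p) + p * log2(3).
    """
    return 1.0 - binary_entropy(p) - p * math.log2(3)


def hashing_threshold(tol: float = 1e-12) -> float:
    """Bisect for the p at which the hashing rate reaches zero.

    The rate is strictly decreasing on [0, 0.75), positive at p = 0 and
    negative at p = 0.5, so the root lies inside that bracket.
    """
    lo, hi = 0.0, 0.5
    while hi - lo > tol:
        mid = (lo + hi) / 2
        if hashing_rate_depolarizing(mid) > 0:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2


if __name__ == "__main__":
    for p in (0.01, 0.05, 0.10):
        print(f"p = {p:.2f}: hashing rate {hashing_rate_depolarizing(p):.4f}")
    print(f"rate reaches zero near p = {hashing_threshold():.4f}")
```

Read this way, a code of rate R "approaches the hashing bound" when it still decodes reliably at noise levels close to the p where the hashing rate equals R.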

The study’s lead researchers, Associate Professor Kenta Kasai and Master’s student Daiki Kawamoto, tackle one of the largest bottlenecks in large-scale quantum computing: the high rate of inherent errors, known as noise.

This noise grows with the number of qubits, and current quantum computers struggle to scale because of the limitations of existing error correction methods. According to the researchers, these methods are resource-intensive, requiring a “massive” number of physical qubits to protect only a few reliable logical qubits.

The researchers say the new codes can pave the way for large-scale fault-tolerant quantum computing because they can handle thousands of qubits. Such a leap is essential for applications like quantum chemistry and optimization problems, which demand millions of logical qubits.

Overcoming Quantum Obstacles

The research team had to overcome long-standing challenges in the design of quantum error correction codes. Earlier designs suffered from low coding rates and from performance stagnation caused by error floors, a regime in which the decoding error rate stops falling steeply even as the noise level decreases.

Their new codes, detailed in the journal npj Quantum Information, are built from protograph low-density parity-check (LDPC) codes using a novel construction based on affine permutations. Unlike conventional binary LDPC codes, these are defined over non-binary finite fields, allowing them to carry more information and improve decoding performance.
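The article does not describe the construction in detail. As a rough illustration of the general idea, a protograph is a small base matrix that is "lifted" into a large parity-check matrix by replacing each nonzero entry with a P x P permutation matrix. The sketch below uses affine permutations i -> (a*i + b) mod P, which reduce to ordinary circulant shifts when a = 1. The function names, the (a, b) parameters and the restriction to a 0/1 base matrix are all assumptions; in a non-binary LDPC code each nonzero entry would also carry a coefficient from a finite field GF(q), which this sketch omits.

```python
from math import gcd


def affine_permutation_matrix(a: int, b: int, P: int) -> list[list[int]]:
    """P x P matrix of the map i -> (a*i + b) mod P (row i has its 1 in that column).

    The map is a permutation only when a is invertible modulo P, i.e. gcd(a, P) == 1.
    """
    if gcd(a, P) != 1:
        raise ValueError("a must be coprime to P for the map to be a permutation")
    return [[1 if j == (a * i + b) % P else 0 for j in range(P)] for i in range(P)]


def lift_protograph(
    base: list[list[int]],
    params: dict[tuple[int, int], tuple[int, int]],
    P: int,
) -> list[list[int]]:
    """Replace each 1 in the base matrix by an affine permutation block, each 0 by zeros.

    params maps a base-matrix position (row, col) to a hypothetical (a, b) pair.
    """
    rows, cols = len(base), len(base[0])
    H = [[0] * (cols * P) for _ in range(rows * P)]
    for r in range(rows):
        for c in range(cols):
            if base[r][c]:
                a, b = params[(r, c)]
                block = affine_permutation_matrix(a, b, P)
                for i in range(P):
                    for j in range(P):
                        H[r * P + i][c * P + j] = block[i][j]
    return H


if __name__ == "__main__":
    # Hypothetical 2 x 4 protograph lifted with P = 7.
    base = [[1, 1, 1, 1],
            [1, 1, 1, 1]]
    params = {(0, 0): (1, 0), (0, 1): (2, 1), (0, 2): (3, 2), (0, 3): (4, 3),
              (1, 0): (1, 5), (1, 1): (5, 0), (1, 2): (6, 4), (1, 3): (2, 6)}
    H = lift_protograph(base, params, P=7)
    print(len(H), "x", len(H[0]), "parity-check matrix")
    print("row weights:", sorted({sum(row) for row in H}))
```

Allowing a to differ from 1 enlarges the pool of available permutations beyond plain circulant shifts, giving the designer more freedom when choosing the lifted graph.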

The researchers then transformed these codes into Calderbank-Shor-Steane (CSS) codes, a well-known family of quantum error correction codes. Crucially, they also developed a new, efficient decoding method that uses the sum-product algorithm to handle both bit-flip (X) and phase-flip (Z) errors simultaneously; these are the two fundamental types of errors in quantum computing.
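A CSS code is specified by two binary parity-check matrices, H_X and H_Z, whose rows must overlap in an even number of positions (H_X * H_Z^T = 0 over GF(2)) so that all checks commute. Bit-flip (X) errors are revealed by the syndrome H_Z * e and phase-flip (Z) errors by H_X * e, and the code encodes k = n - rank(H_X) - rank(H_Z) logical qubits. The Python sketch below checks these properties for the 7-qubit Steane code, chosen only as a small, well-known example; it is not the construction from the paper, whose codes are built over non-binary fields, and the helper names are hypothetical.

```python
# Steane code: H_X = H_Z = parity-check matrix of the [7, 4, 3] Hamming code.
HAMMING = [
    [0, 0, 0, 1, 1, 1, 1],
    [0, 1, 1, 0, 0, 1, 1],
    [1, 0, 1, 0, 1, 0, 1],
]


def gf2_rank(matrix: list[list[int]]) -> int:
    """Rank over GF(2) by Gaussian elimination on rows packed into integers."""
    pivots: dict[int, int] = {}  # leading-bit position -> row with that leading bit
    for row in matrix:
        v = int("".join(str(bit) for bit in row), 2)
        while v:
            top = v.bit_length() - 1
            if top in pivots:
                v ^= pivots[top]  # clear the leading bit and keep reducing
            else:
                pivots[top] = v
                break
    return len(pivots)


def is_valid_css(hx: list[list[int]], hz: list[list[int]]) -> bool:
    """CSS condition: every X check overlaps every Z check on an even number of qubits."""
    return all(sum(a * b for a, b in zip(rx, rz)) % 2 == 0 for rx in hx for rz in hz)


def syndrome(h: list[list[int]], error: list[int]) -> tuple[int, ...]:
    """Parity of each check row against a binary error pattern."""
    return tuple(sum(a * b for a, b in zip(row, error)) % 2 for row in h)


def logical_qubits(hx: list[list[int]], hz: list[list[int]]) -> int:
    """k = n - rank(H_X) - rank(H_Z) for a CSS code on n physical qubits."""
    n = len(hx[0])
    return n - gf2_rank(hx) - gf2_rank(hz)


if __name__ == "__main__":
    hx = hz = HAMMING
    print("commuting checks:", is_valid_css(hx, hz))
    print("logical qubits:", logical_qubits(hx, hz))
    x_error = [0, 0, 0, 0, 1, 0, 0]  # X flip on the fifth qubit
    # The Z-check syndrome reads 1, 0, 1: the binary label of qubit 5.
    print("Z-check syndrome of the X error:", syndrome(hz, x_error))
```

In a sum-product decoder like the one the article describes, syndromes such as these are the inputs from which the decoder infers the most likely error; this example only shows the bookkeeping around that step.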

Efficiency and Scalability

The team describes the results of its large-scale numerical simulations as remarkable: the codes showed high decoding performance with low frame error rates, even at sizes of thousands of qubits. According to the team, this is a significant step towards the theoretical hashing bound.
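A frame error rate (FER) is the fraction of simulated blocks, or frames, that the decoder fails to correct. The article does not describe the team's simulation setup, so the following Python sketch only illustrates the metric. It estimates the FER of the 7-qubit Steane code against independent bit-flip noise using a lookup-table decoder, counting a frame as failed when the leftover error after correction is not a stabilizer, and compares the Monte Carlo estimate with an exact sum over all error patterns. The code, noise model and decoder are assumptions chosen for brevity; the paper's codes are far larger and use sum-product decoding.

```python
import itertools
import random

# Hamming [7, 4, 3] checks; the Steane code uses them for both X and Z checks.
H = [
    [0, 0, 0, 1, 1, 1, 1],
    [0, 1, 1, 0, 0, 1, 1],
    [1, 0, 1, 0, 1, 0, 1],
]
N = 7


def syndrome(error) -> tuple[int, ...]:
    return tuple(sum(h * e for h, e in zip(row, error)) % 2 for row in H)


# Lookup decoder: map each syndrome to the lowest-weight X correction (weight 0 or 1).
DECODER = {syndrome([0] * N): (0,) * N}
for q in range(N):
    flip = tuple(1 if i == q else 0 for i in range(N))
    DECODER[syndrome(flip)] = flip

# X-type stabilizers: all GF(2) combinations of the check rows (8 elements).
STABILIZERS = {
    tuple(sum(c * row[i] for c, row in zip(coeffs, H)) % 2 for i in range(N))
    for coeffs in itertools.product((0, 1), repeat=len(H))
}


def frame_fails(error) -> bool:
    """True if the residual error after lookup correction is not a stabilizer."""
    correction = DECODER[syndrome(error)]
    residual = tuple((e + c) % 2 for e, c in zip(error, correction))
    return residual not in STABILIZERS


def estimate_fer(p: float, frames: int, seed: int = 0) -> float:
    """Monte Carlo FER: each qubit suffers an X flip independently with probability p."""
    rng = random.Random(seed)
    failures = sum(
        frame_fails([1 if rng.random() < p else 0 for _ in range(N)])
        for _ in range(frames)
    )
    return failures / frames


def exact_fer(p: float) -> float:
    """Exact FER by summing the probability of every failing error pattern."""
    total = 0.0
    for error in itertools.product((0, 1), repeat=N):
        w = sum(error)
        if frame_fails(error):
            total += p ** w * (1 - p) ** (N - w)
    return total


if __name__ == "__main__":
    for p in (0.01, 0.05):
        print(f"p = {p}: simulated FER {estimate_fer(p, 20_000):.4f}, exact {exact_fer(p):.4f}")
```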

“Our quantum error-correcting code has a greater than code rate, targeting hundreds of thousands of logical qubits,” explains Kasai. “Moreover, its decoding complexity is proportional to the number of physical qubits, which is a significant achievement for quantum scalability.”

According to Kasai, because decoding complexity grows only in proportion to the number of physical qubits, the codes remain efficient as systems scale up.
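A back-of-the-envelope argument shows why a sum-product decoder can scale this way, assuming (the article does not say) that it runs a fixed number of iterations I on a Tanner graph in which every one of the n qubit nodes has at most d_v edges. Each iteration passes one message in each direction along every edge E, and for a fixed field size q the cost of one message update does not depend on n, so

$$\text{cost} \approx 2\,I\,|E| \le 2\,I\,d_v\,n = O(n).$$

Doubling the number of physical qubits therefore roughly doubles the decoding work, rather than increasing it faster.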

Kasai added, “This will significantly improve the reliability and scalability of quantum computers for practical applications while also paving the way for future research.”